Data Ingestion

What is Data Ingestion?

Data ingestion is the process of obtaining and importing data for immediate use or storage in a database. To ingest something is to “take something in or absorb something.” (Source: https://whatis.techtarget.com/definition/data-ingestion)
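A minimal sketch of this definition in Python, assuming the data arrives as CSV text and is stored in an in-memory SQLite database. The table name `payments`, its columns, and the sample rows are hypothetical; a real source might be a file, an API response, or another database.

```python
import csv
import io
import sqlite3

# Hypothetical raw data "obtained" from some source.
RAW_CSV = """id,name,amount
1,alice,10.5
2,bob,7.25
"""

def ingest_csv(raw_text: str, conn: sqlite3.Connection) -> int:
    """Parse CSV text and import its rows into a SQLite table; return the row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS payments (id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    reader = csv.DictReader(io.StringIO(raw_text))
    # Convert each text field to the type expected by the table.
    rows = [(int(r["id"]), r["name"], float(r["amount"])) for r in reader]
    conn.executemany("INSERT INTO payments (id, name, amount) VALUES (?, ?, ?)", rows)
    conn.commit()
    return len(rows)

conn = sqlite3.connect(":memory:")
count = ingest_csv(RAW_CSV, conn)
print(count)  # 2
print(conn.execute("SELECT SUM(amount) FROM payments").fetchone()[0])  # 17.75
```

Once stored, the data is available for immediate querying, which is the "storage in a database" half of the definition.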

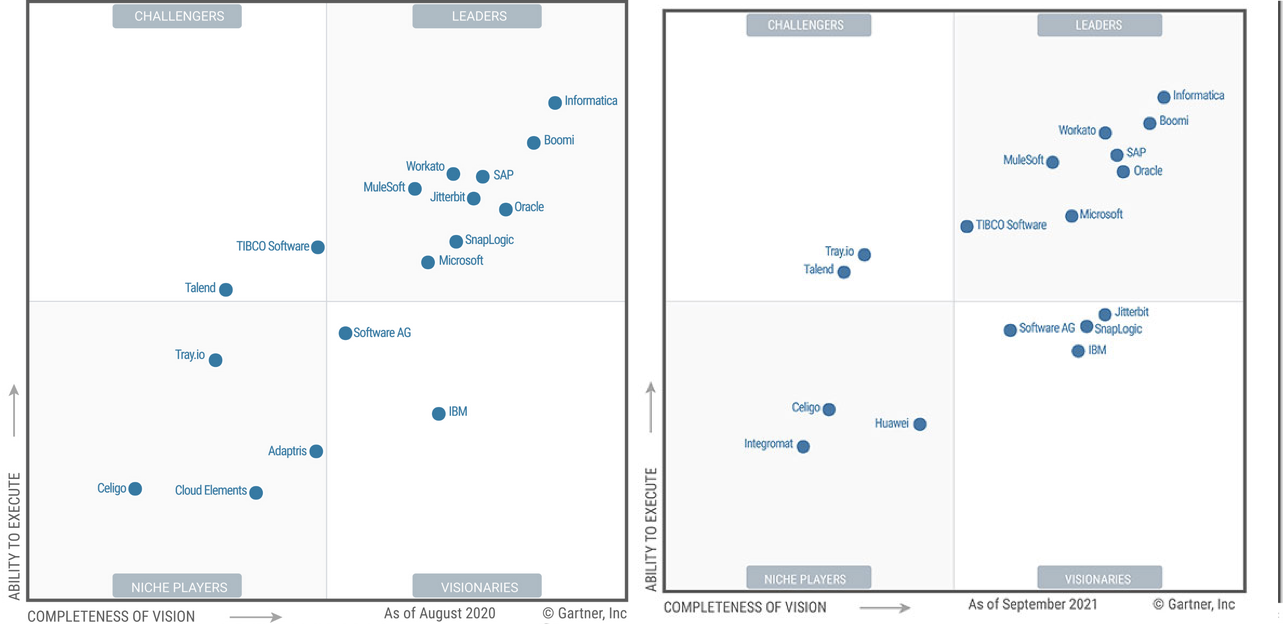

A Market Perspective

Magic Quadrant for Enterprise Integration Platform as a Service

September 2020-2021

Informatica

Cloud Mass Ingestion: efficiently ingest streaming big data, databases, and files with cloud-based services.

https://www.informatica.com/it/products/cloud-integration/ingestion-at-scale.html

Talend

In 2018, Stitch became part of Talend. Talend is a global open source big data and cloud integration software company whose mission is “to make your data better, more trustworthy, and more available to drive business value.” That aligns with Stitch’s mission “to inspire and empower data-driven people.”

Talend offers a wide range of products that complement Stitch’s frictionless SaaS ETL platform.

Analysis-ready data at your fingertips

Stitch rapidly moves data from 130+ sources into a data warehouse so you can get to answers faster, no coding required.

Data Ingestion vs Data Integration

Data ingestion is similar to, but distinct from, the concept of data integration, which seeks to integrate multiple data sources into a cohesive whole. With data integration, the sources may be entirely within your own systems; on the other hand, data ingestion suggests that at least part of the data is pulled from another location (e.g. a website, SaaS application, or external database).

Big Data Ingestion

https://www.xenonstack.com/blog/ingestion-processing-data-for-big-data-iot-solutions

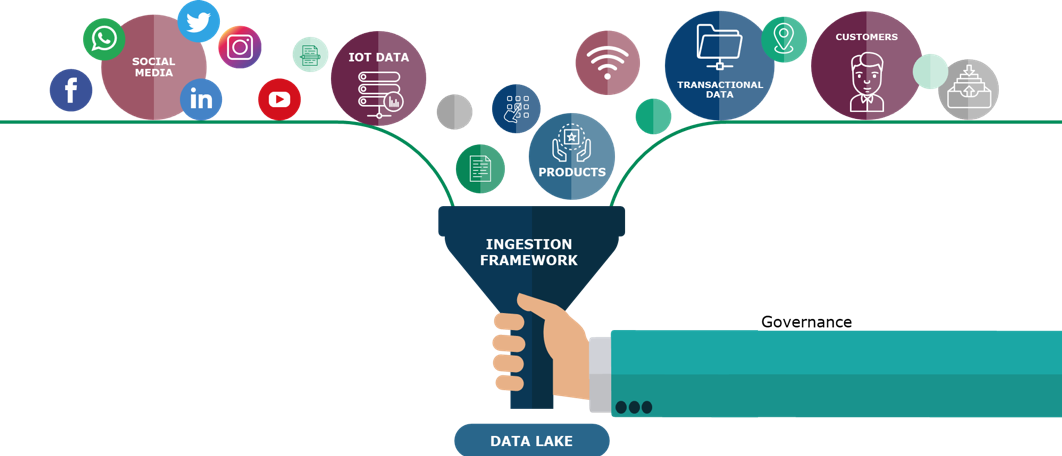

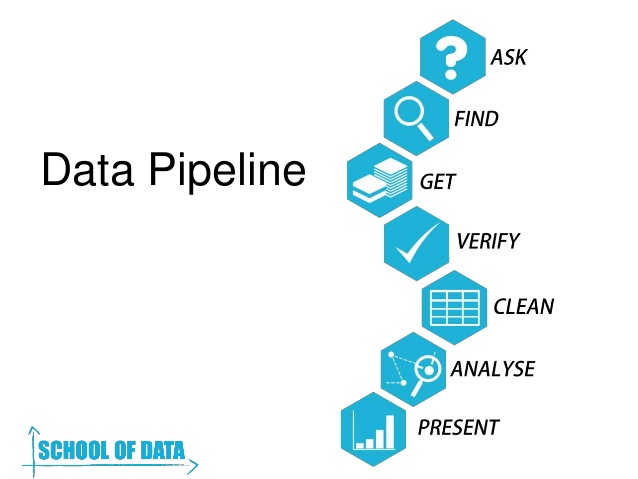

Data ingestion is the first stage of a data pipeline, where data is obtained or imported for immediate use.

Data can be streamed in real time or ingested in batches. When data is ingested in real time, each item is ingested as soon as it arrives.

An effective data ingestion process begins by:

prioritizing data sources

validating individual files

routing data items to the correct destination
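The three steps above can be sketched in Python as follows. Everything here is an illustrative assumption: the `Source` type, the convention that a lower `priority` number means "ingest first", the per-file validation rules in `is_valid`, and the `ROUTES` table mapping file extensions to destinations.

```python
import json
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

@dataclass
class Source:
    name: str
    priority: int  # assumed convention: lower number = more important, ingested first
    files: List[Tuple[str, str]] = field(default_factory=list)  # (filename, content) pairs

# Hypothetical routing table: file extension -> destination.
ROUTES = {".json": "document_store", ".csv": "warehouse"}

def is_valid(filename: str, content: str) -> bool:
    """Hypothetical per-file checks: JSON must parse, CSV must have a comma-separated header."""
    if filename.endswith(".json"):
        try:
            json.loads(content)
            return True
        except json.JSONDecodeError:
            return False
    if filename.endswith(".csv"):
        lines = content.splitlines()
        return bool(lines) and "," in lines[0]
    return False  # unknown formats are rejected

def ingest(sources: List[Source]) -> Tuple[Dict[str, List[str]], List[str]]:
    routed: Dict[str, List[str]] = {"document_store": [], "warehouse": [], "quarantine": []}
    order: List[str] = []
    # 1. Prioritize: visit the most important sources first.
    for source in sorted(sources, key=lambda s: s.priority):
        for filename, content in source.files:
            order.append(filename)
            # 2. Validate each individual file; invalid files go to quarantine.
            if not is_valid(filename, content):
                routed["quarantine"].append(filename)
                continue
            # 3. Route the valid item to its destination based on its extension.
            extension = filename[filename.rfind("."):]
            routed[ROUTES[extension]].append(filename)
    return routed, order

sources = [
    Source("crm", priority=2, files=[("customers.csv", "id,name\n1,alice")]),
    Source("web", priority=1, files=[("events.json", '{"click": 1}'), ("broken.json", "{oops")]),
]
routed, order = ingest(sources)
print(order)   # ['events.json', 'broken.json', 'customers.csv']
print(routed)  # {'document_store': ['events.json'], 'warehouse': ['customers.csv'], 'quarantine': ['broken.json']}
```

Sending invalid files to a quarantine area instead of dropping them is one common design choice: it keeps bad inputs available for inspection without letting them reach downstream systems.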

Data Velocity

Data velocity deals with the speed at which data flows in from different sources, such as machines, networks, human interaction, media sites, and social media. The flow of data can be massive or continuous.
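Velocity can be measured as events per unit of time. A minimal sketch in Python, assuming each event carries a timestamp in seconds and that timestamps arrive in non-decreasing order; the `VelocityMeter` class and its sliding-window approach are illustrative choices, not a standard API.

```python
from collections import deque

class VelocityMeter:
    """Estimate ingestion velocity (events per second) over a sliding time window."""

    def __init__(self, window_seconds: float):
        self.window = window_seconds
        self.timestamps = deque()  # timestamps of events still inside the window

    def record(self, ts: float) -> None:
        self.timestamps.append(ts)
        self._evict(ts)

    def _evict(self, now: float) -> None:
        # Drop events that are older than the window (assumes in-order timestamps).
        while self.timestamps and self.timestamps[0] <= now - self.window:
            self.timestamps.popleft()

    def rate(self, now: float) -> float:
        self._evict(now)
        return len(self.timestamps) / self.window

meter = VelocityMeter(window_seconds=5.0)
for i in range(20):
    meter.record(i * 0.5)  # one event every 0.5 s, from t=0.0 to t=9.5
print(meter.rate(now=10.0))  # 1.8
```

At `now=10.0` only the 9 events in the interval (5.0, 10.0] remain, giving 9 / 5 = 1.8 events per second.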

Data Size

Data size refers to the volume of data, which can be enormous. Data is generated by many different sources, and its volume may grow over time.
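When the volume is too large to load at once, one common approach is to read the input in fixed-size chunks. A minimal Python sketch, assuming line-oriented text input; the `read_in_chunks` helper and the chunk size are hypothetical.

```python
import io
from typing import Iterator, List, TextIO

def read_in_chunks(stream: TextIO, chunk_size: int) -> Iterator[List[str]]:
    """Yield lists of at most chunk_size lines, so the whole input never sits in memory."""
    chunk: List[str] = []
    for line in stream:
        chunk.append(line.rstrip("\n"))
        if len(chunk) == chunk_size:
            yield chunk
            chunk = []
    if chunk:  # emit the final, partially filled chunk
        yield chunk

big_input = io.StringIO("".join(f"record-{i}\n" for i in range(10)))
sizes = [len(c) for c in read_in_chunks(big_input, chunk_size=4)]
print(sizes)  # [4, 4, 2]
```

Because `read_in_chunks` is a generator, memory use depends on the chunk size rather than on the total size of the input.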

Data Frequency (Batch, Real-Time)

Data can be processed in real time or in batches. In real-time processing, data is passed on as soon as it is received; in batch processing, data is collected into batches over a fixed time interval and then moved on together.

Data Format (Structured, Semi-Structured, Unstructured)

Data can come in different formats: structured (e.g. tabular data), semi-structured (e.g. JSON or XML files), or unstructured (e.g. images, audio, and video).
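An ingestion step often has to treat each format class differently. A minimal Python sketch, assuming the format is known from a content-type string; the `parse_payload` function and the mapping from content types to format classes are illustrative assumptions.

```python
import csv
import io
import json

def parse_payload(payload: bytes, content_type: str):
    """Turn raw bytes into a Python value according to its (assumed) format class."""
    if content_type == "text/csv":
        # Structured: tabular rows that share a fixed set of columns.
        rows = list(csv.DictReader(io.StringIO(payload.decode("utf-8"))))
        return ("structured", rows)
    if content_type == "application/json":
        # Semi-structured: nested keys whose shape may vary between records.
        return ("semi-structured", json.loads(payload))
    # Unstructured: images, audio, video, ... kept as opaque bytes.
    return ("unstructured", payload)

print(parse_payload(b"id,name\n1,alice\n", "text/csv"))
print(parse_payload(b'{"user": {"id": 1, "tags": ["a"]}}', "application/json"))
print(parse_payload(b"\x89PNG", "image/png")[0])  # unstructured
```

Structured and semi-structured data can be parsed into fields at ingestion time, while unstructured data is usually stored as-is and interpreted later.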

Data Ingestion Types

Batch / Micro-Batch

Batch data ingestion is about collecting, uploading, and processing data in steps.

Data are accumulated over a period of time (or until a given size is reached) and then made ready for processing.

A batch can contain any kind of data: log files, databases, structured or unstructured, text or binary.

Batches can be big or small.
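The "accumulate for an amount of time (or size)" rule can be sketched in Python as a micro-batcher that flushes whichever limit is reached first. The `MicroBatcher` class, its parameters, and the injectable `clock` (used here so the example is deterministic) are hypothetical.

```python
import time
from typing import Callable, List, Optional

class MicroBatcher:
    """Accumulate records and flush them when a size limit or a time limit is reached."""

    def __init__(self, max_size: int, max_age_seconds: float,
                 flush_fn: Callable[[List[str]], None],
                 clock: Callable[[], float] = time.monotonic):
        self.max_size = max_size
        self.max_age = max_age_seconds
        self.flush_fn = flush_fn      # receives each completed batch
        self.clock = clock
        self.buffer: List[str] = []
        self.started_at: Optional[float] = None  # when the current batch began

    def add(self, record: str) -> None:
        if not self.buffer:
            self.started_at = self.clock()
        self.buffer.append(record)
        if len(self.buffer) >= self.max_size:  # size limit reached
            self.flush()

    def tick(self) -> None:
        """Call periodically; flushes a non-empty batch that has waited too long."""
        if self.buffer and self.clock() - self.started_at >= self.max_age:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            self.flush_fn(self.buffer)
            self.buffer = []
            self.started_at = None

now = [0.0]  # fake clock for the example
batches = []
batcher = MicroBatcher(max_size=3, max_age_seconds=5.0,
                       flush_fn=batches.append, clock=lambda: now[0])
for r in ["a", "b", "c", "d"]:
    batcher.add(r)  # "a", "b", "c" are flushed by size
now[0] = 6.0
batcher.tick()      # "d" is flushed by age
print(batches)  # [['a', 'b', 'c'], ['d']]
```

Combining both limits bounds latency (no record waits longer than `max_age_seconds`) as well as batch size.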

Real Time / Streaming

Stream (or real-time) data ingestion is about sending, receiving, and processing a continuous flow of data.

As data are ingested, they are made available to one or more processing systems.
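Making each record available to more than one processing system is typically done with publish/subscribe. A minimal, synchronous, in-process Python sketch; the `StreamBroker` class and the topic name are hypothetical, and a real deployment would use a dedicated message broker rather than function calls.

```python
from collections import defaultdict
from typing import Callable, Dict, List

class StreamBroker:
    """Minimal pub/sub: every record published to a topic is delivered
    to every subscriber of that topic as soon as it arrives."""

    def __init__(self):
        self.subscribers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self.subscribers[topic].append(handler)

    def publish(self, topic: str, record: dict) -> None:
        # Fan out: each subscribed processing system receives the same record.
        for handler in self.subscribers[topic]:
            handler(record)

broker = StreamBroker()
dashboard, archive = [], []
broker.subscribe("clicks", dashboard.append)                      # e.g. a live dashboard
broker.subscribe("clicks", lambda r: archive.append(r["user"]))   # e.g. an archiver
for user in ["u1", "u2"]:
    broker.publish("clicks", {"user": user})
print(dashboard)  # [{'user': 'u1'}, {'user': 'u2'}]
print(archive)    # ['u1', 'u2']
```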

Real Time Examples

https://www.octoboard.com/it/real-time-business-dashboard-live

Applications of Stream Processing

Complex event processing

https://en.wikipedia.org/wiki/Complex_event_processing

For instance, a monitoring system may receive the following three events from the same source:

church bells ringing;

the appearance of a man in a tuxedo with a woman in a flowing white gown;

rice flying through the air.

From these events, the monitoring system may infer a complex event: a wedding.
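The wedding inference can be sketched in Python as pattern matching over simple events. The event names, the `Event` type, and the requirement that all three events fall within a time window are assumptions added for illustration; a real complex event processing engine would evaluate patterns incrementally as events stream in, whereas this sketch checks a finite list for clarity.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Event:
    source: str
    kind: str
    timestamp: float  # seconds

# Hypothetical names for the three simple events in the example.
WEDDING_PATTERN = {"church_bells", "tuxedo_and_white_gown", "rice_in_air"}

def detect_wedding(events: List[Event], window_seconds: float) -> Optional[str]:
    """Return the first source where all pattern events occur within window_seconds, else None."""
    by_source = {}
    for ev in sorted(events, key=lambda e: e.timestamp):
        by_source.setdefault(ev.source, []).append(ev)
    for source, evs in by_source.items():
        for i, first in enumerate(evs):
            # Kinds of event seen in the window that starts at this event.
            kinds = {e.kind for e in evs[i:] if e.timestamp - first.timestamp <= window_seconds}
            if WEDDING_PATTERN <= kinds:  # every simple event in the pattern was seen
                return source
    return None

stream = [
    Event("camera-7", "church_bells", 0.0),
    Event("camera-7", "tuxedo_and_white_gown", 30.0),
    Event("camera-3", "rice_in_air", 40.0),  # different source: does not count
    Event("camera-7", "rice_in_air", 90.0),
]
print(detect_wedding(stream, window_seconds=120.0))  # camera-7
print(detect_wedding(stream, window_seconds=60.0))   # None
```

With a 60-second window, no single window on `camera-7` contains all three events, so no complex event is inferred.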

Data Ingestion Tools

https://www.predictiveanalyticstoday.com/data-ingestion-tools/